ResNet Parameters

import inspect

import torchvision.models as models

# Load the pretrained ResNet-18 model
model = models.resnet18(weights=models.ResNet18_Weights.DEFAULT)
model.eval()  # switch to evaluation mode

print("ResNet-18 documentation:")
print(inspect.getdoc(models.resnet18))
print("\n" + "=" * 60 + "\n")

# Count the parameters of each top-level component
def analyze_resnet18_params(model):
    total_params = 0
    print(f"{'Layer Name':<25} {'Parameters':<12} {'Shape':<30} {'Description':<20}")
    print("-" * 90)

    # conv1: the initial 7x7 convolution
    conv1 = model.conv1
    conv1_params = sum(p.numel() for p in conv1.parameters())
    total_params += conv1_params
    print(f"{'conv1':<25} {conv1_params:<12} {str(tuple(conv1.weight.shape)):<30} {'Initial convolution'}")

    # bn1: the first batch normalization layer
    bn1 = model.bn1
    bn1_params = sum(p.numel() for p in bn1.parameters())
    total_params += bn1_params
    print(f"{'bn1':<25} {bn1_params:<12} {str(tuple(bn1.weight.shape)):<30} {'Batch normalization'}")

    # Residual stages
    layer_names = ['layer1', 'layer2', 'layer3', 'layer4']
    layer_descriptions = ['Stage 1 (2 BasicBlocks)', 'Stage 2 (2 BasicBlocks)',
                          'Stage 3 (2 BasicBlocks)', 'Stage 4 (2 BasicBlocks)']
    for i, (layer_name, desc) in enumerate(zip(layer_names, layer_descriptions)):
        layer = getattr(model, layer_name)
        layer_params = 0
        # Each stage is a sequence of BasicBlock modules
        for j, block in enumerate(layer):
            block_params = sum(p.numel() for p in block.parameters())
            layer_params += block_params
            total_params += block_params
            print(f"{f'{layer_name}[{j}]':<25} {block_params:<12} {'-':<30} {f'Stage {i+1}, block {j+1}'}")
        print(f"{f'{layer_name}_total':<25} {layer_params:<12} {'-':<30} {desc}")

    # fc: the fully connected classifier
    fc = model.fc
    fc_params = sum(p.numel() for p in fc.parameters())
    total_params += fc_params
    print(f"{'fc':<25} {fc_params:<12} {str(tuple(fc.weight.shape)):<30} {'Fully connected classifier'}")

    print("-" * 90)
    print(f"{'TOTAL':<25} {total_params:<12} {'-':<30} {'Total parameters'}")
    return total_params

# Run the analysis
total_params = analyze_resnet18_params(model)

print("\n" + "=" * 60)
print("ResNet-18 structure explained:")
print("=" * 60)

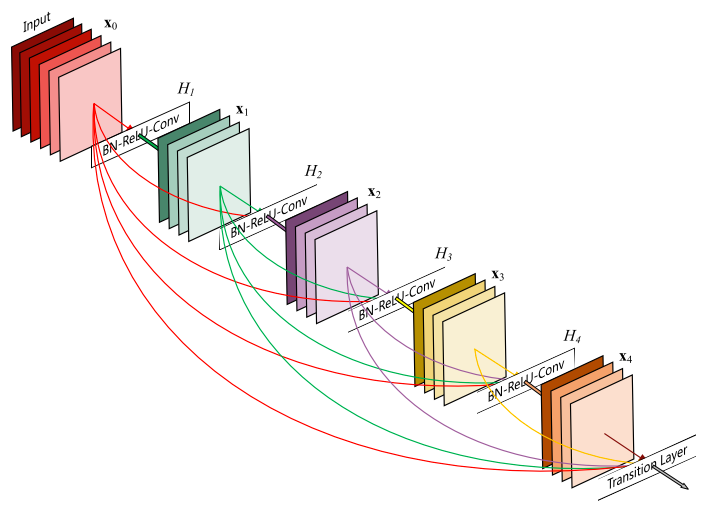

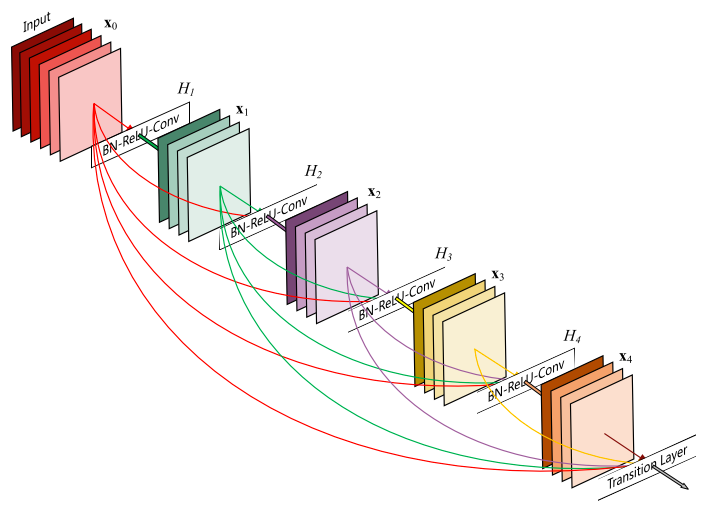

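print("\n1. Network overview:")
print(" - ResNet-18 has 18 weighted layers (17 convolutional + 1 fully connected, not counting the 1x1 shortcut convolutions)")
print(" - Residual connections mitigate vanishing gradients in deep networks")
print(" - Total parameter count is about 11.7M")

print("\n2. Main components:")
print(" - conv1: initial convolution with a 7x7 kernel, extracts low-level features")
print(" - bn1: batch normalization, speeds up training and improves stability")
print(" - layer1-4: four residual stages, each made of two BasicBlocks")
print(" - Each BasicBlock: two 3x3 convolutions plus a residual connection")
print(" - fc: fully connected layer, maps the features to 1000 classes")

print("\n3. Parameter distribution:")
print(" - Most parameters sit in the convolutional stages; layer4 alone holds about 72%")
print(" - The fully connected layer (fc) holds only about 4.4% (513,000 parameters)")
print(" - Small 3x3 kernels keep the per-layer parameter count low")
print(" - Identity shortcuts add no parameters; the three 1x1 projection shortcuts in layer2-4 add about 0.17M")

print("\n4. Design advantages:")
print(" - The residual structure lets the network go deep (18 layers) while mitigating vanishing gradients")
print(" - Batch normalization improves training stability")
print(" - Global average pooling reduces the number of fc parameters")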

Output

ResNet-18 documentation:
ResNet-18 from `Deep Residual Learning for Image Recognition `__.

Args:
    weights (:class:`~torchvision.models.ResNet18_Weights`, optional): The
        pretrained weights to use. See
        :class:`~torchvision.models.ResNet18_Weights` below for
        more details, and possible values. By default, no pre-trained
        weights are used.
    progress (bool, optional): If True, displays a progress bar of the
        download to stderr. Default is True.
    **kwargs: parameters passed to the ``torchvision.models.resnet.ResNet``
        base class. Please refer to the `source code
        `_
        for more details about this class.

.. autoclass:: torchvision.models.ResNet18_Weights
    :members:

============================================================

Layer Name                Parameters   Shape                          Description
------------------------------------------------------------------------------------------
conv1                     9408         (64, 3, 7, 7)                  Initial convolution
bn1                       128          (64,)                          Batch normalization
layer1[0]                 73984        -                              Stage 1, block 1
layer1[1]                 73984        -                              Stage 1, block 2
layer1_total              147968       -                              Stage 1 (2 BasicBlocks)
layer2[0]                 230144       -                              Stage 2, block 1
layer2[1]                 295424       -                              Stage 2, block 2
layer2_total              525568       -                              Stage 2 (2 BasicBlocks)
layer3[0]                 919040       -                              Stage 3, block 1
layer3[1]                 1180672      -                              Stage 3, block 2
layer3_total              2099712      -                              Stage 3 (2 BasicBlocks)
layer4[0]                 3673088      -                              Stage 4, block 1
layer4[1]                 4720640      -                              Stage 4, block 2
layer4_total              8393728      -                              Stage 4 (2 BasicBlocks)
fc                        513000       (1000, 512)                    Fully connected classifier
------------------------------------------------------------------------------------------
TOTAL                     11689512     -                              Total parameters
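
The counts in the table can be reproduced by hand from the layer shapes. The following is a minimal sketch, assuming the torchvision ResNet-18 layout: bias-free convolutions, two learnable parameters (weight and bias) per BatchNorm channel, and a 1x1 projection shortcut in any block that changes the channel count. The helper names (`conv_params`, `bn_params`, `linear_params`, `basic_block_params`, `resnet18_param_counts`) are illustrative and not part of torchvision.

```python
def conv_params(c_in, c_out, k):
    # Bias-free k x k convolution: one k*k kernel per (input, output) channel pair.
    return c_out * c_in * k * k


def bn_params(channels):
    # BatchNorm learns one scale and one shift per channel.
    return 2 * channels


def linear_params(n_in, n_out):
    # Weight matrix plus one bias per output unit.
    return n_in * n_out + n_out


def basic_block_params(c_in, c_out):
    # Two 3x3 convolutions, each followed by BatchNorm.
    n = conv_params(c_in, c_out, 3) + bn_params(c_out)
    n += conv_params(c_out, c_out, 3) + bn_params(c_out)
    # Assumption: a 1x1 projection shortcut (conv + BatchNorm) is used
    # exactly when the channel count changes, as in ResNet-18.
    if c_in != c_out:
        n += conv_params(c_in, c_out, 1) + bn_params(c_out)
    return n


def resnet18_param_counts(num_classes=1000):
    counts = {"conv1": conv_params(3, 64, 7), "bn1": bn_params(64)}
    c_in = 64
    for idx, c_out in enumerate([64, 128, 256, 512], start=1):
        # Each stage: one block that may change channels, then one that keeps them.
        counts[f"layer{idx}"] = (
            basic_block_params(c_in, c_out) + basic_block_params(c_out, c_out)
        )
        c_in = c_out
    counts["fc"] = linear_params(512, num_classes)
    return counts


counts = resnet18_param_counts()
for name, n in counts.items():
    print(f"{name:<8} {n}")
print(f"{'TOTAL':<8} {sum(counts.values())}")
```

Under these assumptions the per-component values and the total agree with the table: for example, conv1 is 64 x 3 x 7 x 7 = 9408, and fc is 512 x 1000 + 1000 = 513000.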

============================================================

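ResNet-18 structure explained:
============================================================

1. Network overview:
 - ResNet-18 has 18 weighted layers (17 convolutional + 1 fully connected, not counting the 1x1 shortcut convolutions)
 - Residual connections mitigate vanishing gradients in deep networks
 - Total parameter count is about 11.7M

2. Main components:
 - conv1: initial convolution with a 7x7 kernel, extracts low-level features
 - bn1: batch normalization, speeds up training and improves stability
 - layer1-4: four residual stages, each made of two BasicBlocks
 - Each BasicBlock: two 3x3 convolutions plus a residual connection
 - fc: fully connected layer, maps the features to 1000 classes

3. Parameter distribution:
 - Most parameters sit in the convolutional stages; layer4 alone holds about 72%
 - The fully connected layer (fc) holds only about 4.4% (513,000 parameters)
 - Small 3x3 kernels keep the per-layer parameter count low
 - Identity shortcuts add no parameters; the three 1x1 projection shortcuts in layer2-4 add about 0.17M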
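
To see where the parameters are concentrated, the shares can be computed directly from the counts in the table printed earlier. This is a small sketch; the dictionary `reported` and the helper `parameter_shares` are illustrative names, and the numbers are copied from the table rather than read from the model.

```python
# Per-component counts copied from the printed table.
reported = {
    "conv1": 9408,
    "bn1": 128,
    "layer1": 147968,
    "layer2": 525568,
    "layer3": 2099712,
    "layer4": 8393728,
    "fc": 513000,
}


def parameter_shares(counts):
    # Percentage of the total parameter count held by each component.
    total = sum(counts.values())
    return {name: 100.0 * n / total for name, n in counts.items()}


shares = parameter_shares(reported)
# Print components from largest to smallest share.
for name, pct in sorted(shares.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:<8} {reported[name]:>10,} {pct:6.2f}%")
```

With these numbers layer4 accounts for roughly 72% of all parameters and fc for under 5%, so the weight of the model is in the last convolutional stage rather than in the classifier.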

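4. Design advantages:
 - The residual structure lets the network go deep (18 layers) while mitigating vanishing gradients
 - Batch normalization improves training stability
 - Global average pooling reduces the number of fc parameters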
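
The effect of global average pooling on the classifier size can be checked with a little arithmetic. The sketch below assumes a 224x224 input, for which the last stage produces a 512-channel 7x7 feature map; the "flatten" variant is a hypothetical alternative for comparison, not something ResNet-18 does.

```python
def linear_params(n_in, n_out):
    # Weight matrix plus one bias per output unit.
    return n_in * n_out + n_out


# Assumed final feature map for a 224x224 input: 512 channels, 7x7 spatial.
channels, height, width, num_classes = 512, 7, 7, 1000

# With global average pooling, each channel collapses to one value.
with_gap = linear_params(channels, num_classes)

# Hypothetical alternative: flatten the whole feature map before the classifier.
without_gap = linear_params(channels * height * width, num_classes)

print(f"fc with global average pooling: {with_gap:,}")
print(f"fc on flattened feature map:    {without_gap:,}")
print(f"ratio: {without_gap / with_gap:.1f}x")
```

Under that assumption the pooled classifier needs 513,000 parameters, which is the fc value in the table, while the flattened variant would need about 25 million, more than twice the entire network.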