Python Activation Functions

import torch
import torch.nn as nn
import torch.nn.functional as F


# Define the LeNet-5 network (corrected version)
class LeNet5(nn.Module):
    def __init__(self, num_classes=10):
        super().__init__()
        # Feature extractor
        self.conv1 = nn.Conv2d(1, 6, kernel_size=5, stride=1)   # 1 input channel, 6 output channels
        self.conv2 = nn.Conv2d(6, 16, kernel_size=5, stride=1)  # 6 input channels, 16 output channels
        # Classifier: fc1 takes the flattened 16 * 5 * 5 = 400 features
        self.fc1 = nn.Linear(16 * 5 * 5, 120)
        self.fc2 = nn.Linear(120, 84)
        self.fc3 = nn.Linear(84, num_classes)

    def forward(self, x):
        # Block 1: convolution + ReLU + average pooling
        x = F.relu(self.conv1(x))
        x = F.avg_pool2d(x, kernel_size=2, stride=2)
        # Block 2: convolution + ReLU + average pooling
        x = F.relu(self.conv2(x))
        x = F.avg_pool2d(x, kernel_size=2, stride=2)
        # Flatten everything except the batch dimension: 16 * 5 * 5 = 400 features per sample
        x = torch.flatten(x, 1)
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        x = self.fc3(x)  # raw class scores (logits), no activation
        return x

# Create the LeNet-5 model
model = LeNet5()
model.eval()

print("LeNet-5 Network Structure Analysis (corrected version)")
print("=" * 80)

# Analyze the parameter count of each layer
def analyze_lenet5_params(model):
    total_params = 0
    print(f"{'Layer Name':<15} {'Type':<15} {'Parameters':<12} {'Shape':<25} {'Description':<20}")
    print("-" * 90)

    # Layers to analyze: convolutional and fully connected
    layers = [
        ('conv1', model.conv1, 'Conv layer 1 (5x5)'),
        ('conv2', model.conv2, 'Conv layer 2 (5x5)'),
        ('fc1', model.fc1, 'Fully connected 1 (120)'),
        ('fc2', model.fc2, 'Fully connected 2 (84)'),
        ('fc3', model.fc3, 'Output layer (10)'),
    ]

    for name, layer, desc in layers:
        # Count weights and biases together
        layer_params = sum(p.numel() for p in layer.parameters())
        total_params += layer_params
        if hasattr(layer, 'weight'):
            weight_shape = tuple(layer.weight.shape)
        else:
            weight_shape = '-'
        print(f"{name:<15} {type(layer).__name__:<15} {layer_params:<12} {str(weight_shape):<25} {desc}")

    print("-" * 90)
    print(f"{'TOTAL':<15} {'-':<15} {total_params:<12} {'-':<25} {'Total parameters'}")
    return total_params


# Run the analysis
total_params = analyze_lenet5_params(model)

print("\n" + "=" * 60)
print("LeNet-5 structure in detail:")
print("=" * 60)

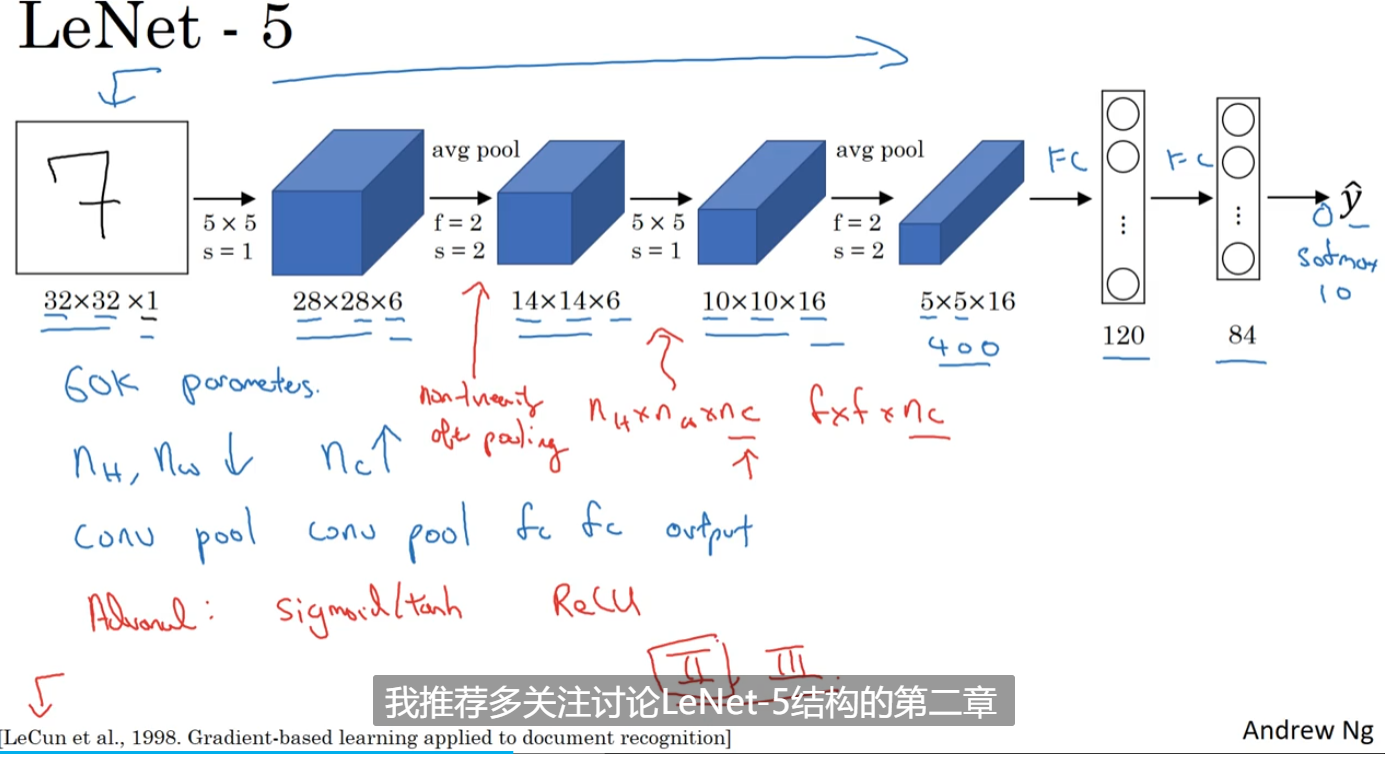

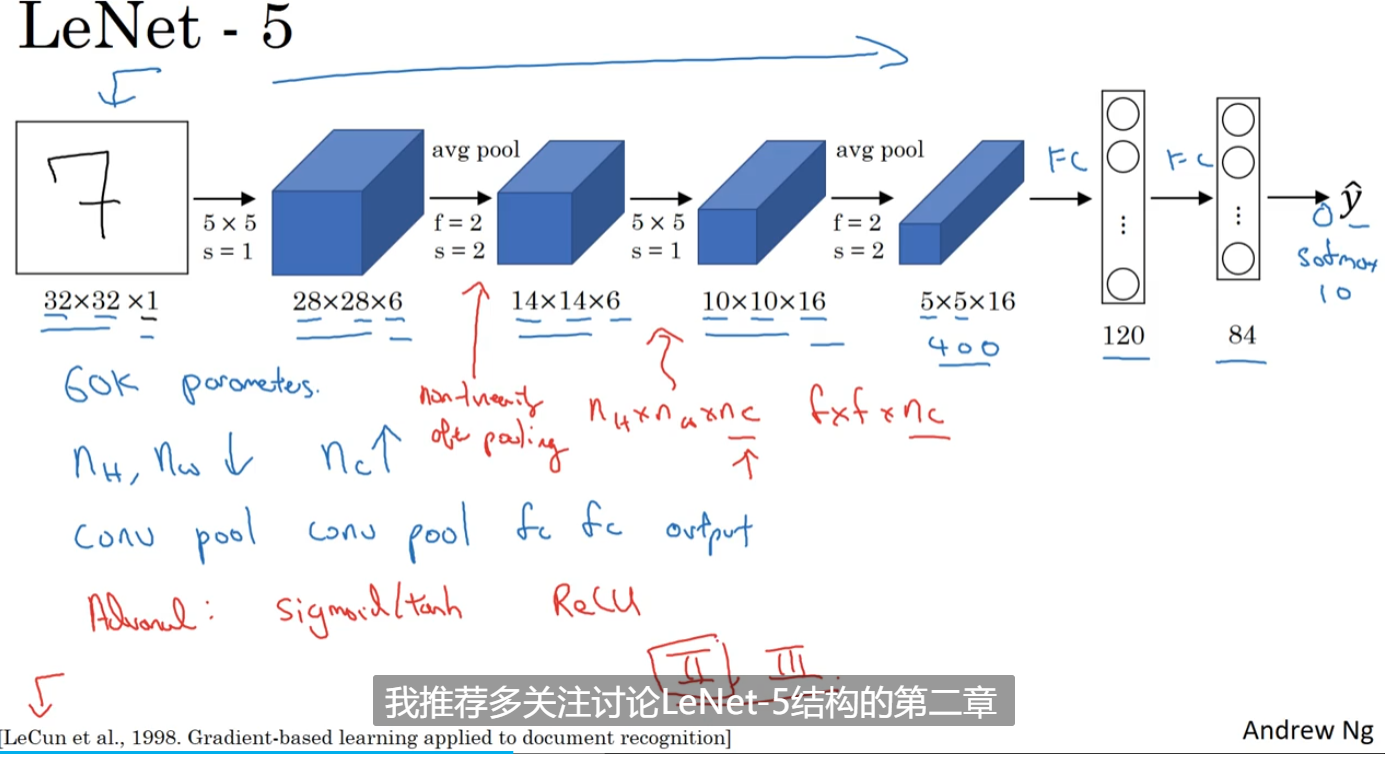

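print("\n1. Network overview:")
print("   - LeNet-5 has 5 layers with weights (2 convolutional + 3 fully connected)")
print(f"   - Total parameters: {total_params:,}")
print("   - Designed for handwritten digit recognition (MNIST dataset)")

print("\n2. Layer-by-layer details:")
print("   - Input: 32×32×1 grayscale image")
print("   - conv1: 1 input channel, 6 output channels, 5x5 kernel, no padding")
print("     Output size: (32-5)/1 + 1 = 28, giving 28×28×6")
print("     Parameters: (5×5×1 + 1)×6 = 156")
print("   - Pooling layer 1: 2×2 average pooling, stride 2")
print("     Output size: 28/2 = 14, giving 14×14×6")
print("   - conv2: 6 input channels, 16 output channels, 5x5 kernel, no padding")
print("     Output size: (14-5)/1 + 1 = 10, giving 10×10×16")
print("     Parameters: (5×5×6 + 1)×16 = 2,416")
print("   - Pooling layer 2: 2×2 average pooling, stride 2")
print("     Output size: 10/2 = 5, giving 5×5×16")
print("   - fc1: 400 inputs (5×5×16), 120 outputs")
print("     Parameters: (400 + 1)×120 = 48,120")
print("   - fc2: 120 inputs, 84 outputs")
print("     Parameters: (120 + 1)×84 = 10,164")
print("   - fc3: 84 inputs, 10 outputs")
print("     Parameters: (84 + 1)×10 = 850")

print("\n3. Parameter distribution:")
# Use distinct loop variables for layers and their parameters
conv_params = sum(p.numel() for layer in (model.conv1, model.conv2) for p in layer.parameters())
fc_params = total_params - conv_params
fc1_params = sum(p.numel() for p in model.fc1.parameters())
print(f"   - Convolutional layers: {conv_params} ({conv_params/total_params*100:.1f}%)")
print(f"   - Fully connected layers: {fc_params} ({fc_params/total_params*100:.1f}%)")
print(f"   - fc1 has the most parameters: {fc1_params:,} ({fc1_params/total_params*100:.1f}%)")

print("\n4. Design trade-offs:")
print("   - Pros: simple structure, few parameters, fast training")
print("   - Cons: shallow network, limited feature extraction capacity")
print("   - Use cases: simple image classification tasks such as handwritten digit recognition")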

# Verify the parameter calculations
print("\n" + "=" * 60)
print("Parameter calculation check:")
print("=" * 60)


def verify_params():
    # conv1: (in_channels × kernel_h × kernel_w + 1) × out_channels
    conv1_calc = (1 * 5 * 5 + 1) * 6
    print(f"conv1: (1×5×5 + 1)×6 = {conv1_calc}")

    # conv2: (in_channels × kernel_h × kernel_w + 1) × out_channels
    conv2_calc = (6 * 5 * 5 + 1) * 16
    print(f"conv2: (6×5×5 + 1)×16 = {conv2_calc}")

    # fc1: (in_features + 1) × out_features
    fc1_calc = (16 * 5 * 5 + 1) * 120  # 16*5*5 = 400
    print(f"fc1: (16×5×5 + 1)×120 = ({16*5*5} + 1)×120 = {fc1_calc}")

    # fc2: (in_features + 1) × out_features
    fc2_calc = (120 + 1) * 84
    print(f"fc2: (120 + 1)×84 = {fc2_calc}")

    # fc3: (in_features + 1) × out_features
    fc3_calc = (84 + 1) * 10
    print(f"fc3: (84 + 1)×10 = {fc3_calc}")

    # Compare the hand-computed total with the count taken from the model
    total_calc = conv1_calc + conv2_calc + fc1_calc + fc2_calc + fc3_calc
    print(f"Computed total: {total_calc}")
    print(f"Actual total: {total_params}")
    print(f"Check: {'passed' if total_calc == total_params else 'failed'}")


verify_params()

# Test the forward pass
print("\n" + "=" * 60)
print("Forward pass test:")
print("=" * 60)

# Create a test input (batch_size=1, channels=1, height=32, width=32)
test_input = torch.randn(1, 1, 32, 32)
print(f"Input shape: {test_input.shape}")


# Compute each layer's output size by hand as a cross-check
def calculate_feature_sizes():
    # conv1: (32-5)/1 + 1 = 28
    conv1_out = (32 - 5) // 1 + 1
    pool1_out = conv1_out // 2  # 28/2 = 14
    # conv2: (14-5)/1 + 1 = 10
    conv2_out = (pool1_out - 5) // 1 + 1
    pool2_out = conv2_out // 2  # 10/2 = 5
    fc_input = 16 * pool2_out * pool2_out  # 16*5*5 = 400

    print("Computed output sizes:")
    print(f"  conv1 output: {conv1_out}×{conv1_out}×6")
    print(f"  pool1 output: {pool1_out}×{pool1_out}×6")
    print(f"  conv2 output: {conv2_out}×{conv2_out}×16")
    print(f"  pool2 output: {pool2_out}×{pool2_out}×16")
    print(f"  fc input features: {fc_input}")


calculate_feature_sizes()

# Actual forward pass, layer by layer
print("\nActual forward pass:")
with torch.no_grad():
    x = test_input
    print(f"Input: {x.shape}")
    x = F.relu(model.conv1(x))
    print(f"conv1 output: {x.shape}")
    x = F.avg_pool2d(x, kernel_size=2, stride=2)
    print(f"pool1 output: {x.shape}")
    x = F.relu(model.conv2(x))
    print(f"conv2 output: {x.shape}")
    x = F.avg_pool2d(x, kernel_size=2, stride=2)
    print(f"pool2 output: {x.shape}")

    # Inspect the shape before flattening
    batch_size, channels, height, width = x.shape
    print(f"Before flatten: batch={batch_size}, channels={channels}, height={height}, width={width}")
    print(f"Features after flatten: {channels * height * width}")

    x = torch.flatten(x, 1)  # keep the batch dimension, flatten the rest
    print(f"Flattened: {x.shape}")
    x = F.relu(model.fc1(x))
    print(f"fc1 output: {x.shape}")
    x = F.relu(model.fc2(x))
    print(f"fc2 output: {x.shape}")
    x = model.fc3(x)
    print(f"fc3 output: {x.shape}")
    output = x

print(f"\nFinal output shape: {output.shape}")
print(f"Number of output classes: {output.shape[1]}")
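The hand calculation in `calculate_feature_sizes` hard-codes the LeNet-5 numbers. As a minimal sketch, assuming only the standard output-size formula floor((n + 2p - k) / s) + 1 for convolution and pooling layers, the same trace can be written for any square input. The helper names `out_size` and `trace_lenet5` are hypothetical and not part of PyTorch.

```python
def out_size(n, kernel, stride=1, padding=0):
    """Spatial output size of a conv/pool layer: floor((n + 2p - k) / s) + 1."""
    return (n + 2 * padding - kernel) // stride + 1


def trace_lenet5(input_size):
    """Return (conv1, pool1, conv2, pool2, flattened) sizes for a square input."""
    conv1 = out_size(input_size, kernel=5)        # 5x5 conv, no padding
    pool1 = out_size(conv1, kernel=2, stride=2)   # 2x2 average pooling
    conv2 = out_size(pool1, kernel=5)             # 5x5 conv, no padding
    pool2 = out_size(conv2, kernel=2, stride=2)   # 2x2 average pooling
    return conv1, pool1, conv2, pool2, 16 * pool2 * pool2  # 16 output channels


print(trace_lenet5(32))  # (28, 14, 10, 5, 400)
print(trace_lenet5(28))  # (24, 12, 8, 4, 256)
```

A raw 28×28 MNIST image would flatten to 256 features and would not match the 400 inputs of `fc1`. This is why such images are usually padded to 32×32 (or `conv1` is given `padding=2`) before being fed to this network.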

Output

LeNet-5 Network Structure Analysis (corrected version)
================================================================================
Layer Name      Type            Parameters   Shape                     Description
------------------------------------------------------------------------------------------
conv1           Conv2d          156          (6, 1, 5, 5)              Conv layer 1 (5x5)
conv2           Conv2d          2416         (16, 6, 5, 5)             Conv layer 2 (5x5)
fc1             Linear          48120        (120, 400)                Fully connected 1 (120)
fc2             Linear          10164        (84, 120)                 Fully connected 2 (84)
fc3             Linear          850          (10, 84)                  Output layer (10)
------------------------------------------------------------------------------------------
TOTAL           -               61706        -                         Total parameters

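============================================================
LeNet-5 structure in detail:
============================================================

1. Network overview:
   - LeNet-5 has 5 layers with weights (2 convolutional + 3 fully connected)
   - Total parameters: 61,706
   - Designed for handwritten digit recognition (MNIST dataset)

2. Layer-by-layer details:
   - Input: 32×32×1 grayscale image
   - conv1: 1 input channel, 6 output channels, 5x5 kernel, no padding
     Output size: (32-5)/1 + 1 = 28, giving 28×28×6
     Parameters: (5×5×1 + 1)×6 = 156
   - Pooling layer 1: 2×2 average pooling, stride 2
     Output size: 28/2 = 14, giving 14×14×6
   - conv2: 6 input channels, 16 output channels, 5x5 kernel, no padding
     Output size: (14-5)/1 + 1 = 10, giving 10×10×16
     Parameters: (5×5×6 + 1)×16 = 2,416
   - Pooling layer 2: 2×2 average pooling, stride 2
     Output size: 10/2 = 5, giving 5×5×16
   - fc1: 400 inputs (5×5×16), 120 outputs
     Parameters: (400 + 1)×120 = 48,120
   - fc2: 120 inputs, 84 outputs
     Parameters: (120 + 1)×84 = 10,164
   - fc3: 84 inputs, 10 outputs
     Parameters: (84 + 1)×10 = 850

3. Parameter distribution:
   - Convolutional layers: 2572 (4.2%)
   - Fully connected layers: 59134 (95.8%)
   - fc1 has the most parameters: 48,120 (78.0%)

4. Design trade-offs:
   - Pros: simple structure, few parameters, fast training
   - Cons: shallow network, limited feature extraction capacity
   - Use cases: simple image classification tasks such as handwritten digit recognition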

============================================================
Parameter calculation check:
============================================================
conv1: (1×5×5 + 1)×6 = 156
conv2: (6×5×5 + 1)×16 = 2416
fc1: (16×5×5 + 1)×120 = (400 + 1)×120 = 48120
fc2: (120 + 1)×84 = 10164
fc3: (84 + 1)×10 = 850
Computed total: 61706
Actual total: 61706
Check: passed

============================================================
Forward pass test:
============================================================
Input shape: torch.Size([1, 1, 32, 32])
Computed output sizes:
  conv1 output: 28×28×6
  pool1 output: 14×14×6
  conv2 output: 10×10×16
  pool2 output: 5×5×16
  fc input features: 400

Actual forward pass:
Input: torch.Size([1, 1, 32, 32])
conv1 output: torch.Size([1, 6, 28, 28])
pool1 output: torch.Size([1, 6, 14, 14])
conv2 output: torch.Size([1, 16, 10, 10])
pool2 output: torch.Size([1, 16, 5, 5])
Before flatten: batch=1, channels=16, height=5, width=5
Features after flatten: 400
Flattened: torch.Size([1, 400])
fc1 output: torch.Size([1, 120])
fc2 output: torch.Size([1, 84])
fc3 output: torch.Size([1, 10])

Final output shape: torch.Size([1, 10])
Number of output classes: 10
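
The `+ 1` in each parameter formula in the output above is the bias term: every output channel or output unit carries exactly one. A minimal sketch that splits each layer's count into weights and biases, using only the layer sizes listed in the output. The helper names `conv_counts` and `linear_counts` are hypothetical, chosen for illustration.

```python
def conv_counts(in_ch, out_ch, k):
    """(weights, biases) of a k x k convolution with one bias per output channel."""
    return in_ch * k * k * out_ch, out_ch


def linear_counts(in_f, out_f):
    """(weights, biases) of a fully connected layer with one bias per output unit."""
    return in_f * out_f, out_f


layers = {
    "conv1": conv_counts(1, 6, 5),
    "conv2": conv_counts(6, 16, 5),
    "fc1": linear_counts(400, 120),
    "fc2": linear_counts(120, 84),
    "fc3": linear_counts(84, 10),
}

total = sum(w + b for w, b in layers.values())
total_biases = sum(b for _, b in layers.values())

# Per-layer breakdown with each layer's share of the total
for name, (w, b) in layers.items():
    print(f"{name}: {w} weights + {b} biases = {w + b} ({(w + b) / total:.1%})")
print(f"total: {total}, of which biases: {total_biases}")
```

Only 236 of the 61,706 parameters are biases, so nearly all of the model's size comes from the weight matrices, and from that of `fc1` in particular.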